Natural language processing applications are having their long-awaited cultural moment. Unfortunately, the spike of interest was caused not by some aesthetic or conceptual breakthrough but by the rampant malicious use of the technology.

The phenomenon known as "fake news" is the next step in the development of information warfare tools, and it is getting more and more sophisticated as time goes by. In fact, it has become so sophisticated that it is hard to distinguish text written by an algorithm from text written by a living, breathing human being.

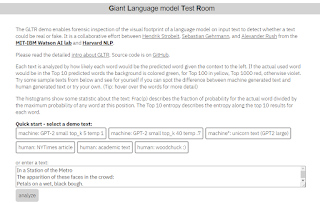

That's the reason the Giant Language Model Test Room, aka GLTR, exists.

GLTR is a natural language processing text analysis tool developed by Hendrik Strobelt of the MIT-IBM Watson AI Lab and Sebastian Gehrmann of Harvard NLP. The purpose of the project is plain and simple: to detect algorithmically generated texts and expose the ways they are constructed. GLTR is meant to serve as a conceptual foundation for bigger and better tools that will turn the tide of malicious NLP use and offer a viable set of tools for an effective counteroffensive.

While fake news is currently getting the most attention of all the "bad NLP" applications, it is not the only facet of the phenomenon. Far from it.

There is a lot of fake content on the internet, and you see it all the time. Think about how many times you were looking for something on the web, only to stumble upon a piece of text that had a perfectly fitting headline and hit all the right notes while also being a whole lotta nothing. Some of it is written by human beings, while some of it is procedurally generated. The latter is becoming more and more prevalent.

As NLP technology develops, using it to produce plausible-looking text is becoming an increasingly cost-effective way of flooding the web with tailor-made content designed to make its mark on search engine rankings.

The key thing is that this kind of content hits "all the right notes" of the search algorithms. It is generic, AKA perfectly fine. And so it gets visible enough in the search rankings to attract significant traffic.

Despite all the improvements to the Google and YouTube search algorithms, they can still be exploited relatively easily. In late 2018, New York Magazine published a good thinkpiece on the subject. Among other things, it states that less than 60% of web traffic is human and the rest is bots and bots and bots. There are numerous faux websites designed to drive fake traffic and create fictional trends to manipulate public opinion.

Traditionally, these tactics were used by marketers from all walks of life to drive digital advertising traffic and generate sweet profit. Now they are being used by foreign governments and extremists as a means of information warfare to manipulate public opinion and generate uproar.

And it works because of the way people consume information online: impulsively, driven by momentary stimulation. As things stand, it is not even about the content itself but about the impact of its surface, which reinforces a certain opinion and fuels it. Combined with the echo chamber effect that tends to happen in recommendation-based newsfeeds, it becomes a blunt weapon of manipulation.

How does GLTR fend off fake news?

To expose algorithmically generated texts, GLTR uses the same kind of language model that malicious NLP applications would use. In this particular case, it is OpenAI's GPT-2, as it is currently one of the most efficient publicly available models. GPT-2 is somewhat infamous for the blatant scaremongering about "indistinguishable from human-written texts" in OpenAI's press releases. In reality, it is really good at generating cute unicorn fiction.

The key technology of the project is a predictive language model.

Here's how it works. Natural language processing relies on pattern recognition. Texts are written in a certain way; there is logic to them. A predictive language model is trained on these patterns, and understanding them makes the model capable of generating logically plausible strings of words based on the input context.
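To make "trained on patterns" concrete, here is a deliberately tiny predictive model: a bigram counter that learns which word tends to follow which and then ranks candidate next words. This is a toy stand-in, not GPT-2 (which is a neural network conditioned on much longer context); the corpus and the function names `train_bigram_model` and `predict_next` are hypothetical, chosen for illustration.

```python
from collections import Counter, defaultdict


def train_bigram_model(corpus: str) -> dict[str, Counter]:
    """Learn the simplest possible pattern: which word tends to follow which."""
    words = corpus.lower().split()
    model: dict[str, Counter] = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        model[prev][nxt] += 1  # count every observed (word, next word) pair
    return model


def predict_next(model: dict[str, Counter], context: str, k: int = 3) -> list[str]:
    """Return up to k likely next words, conditioned only on the context's last word."""
    last = context.lower().split()[-1]
    followers = model.get(last, Counter())  # unseen word: no predictions
    return [word for word, _count in followers.most_common(k)]


corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."
model = train_bigram_model(corpus)
print(predict_next(model, "once upon a time the", k=2))  # ['cat', 'mat']
```

Ties are broken by first occurrence, which is why "mat" beats "fish", "dog" and "rug" for second place.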

Basically, the model constructs texts like puzzle tiles, based on generic language patterns. If done right, this kind of model can be extremely efficient at producing serviceable texts that don't immediately scream "algorithmically generated". In most cases, you wouldn't even pay much attention to these texts, as they are probably way too bland to make an impact amid the intense stream of information oversaturation.
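The "puzzle tiles" idea can be sketched with a toy bigram model (a stand-in for GPT-2, not its actual mechanism) that always snaps in the single most likely next word, a strategy known as greedy decoding. Real generators usually sample from the predictions instead, but the principle of assembling the most probable pieces is similar, and even this tiny example shows how quickly such text turns bland and repetitive. The corpus and function names are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigram_model(corpus: str) -> dict[str, Counter]:
    """Toy stand-in for a language model: count which word follows which."""
    words = corpus.lower().split()
    model: dict[str, Counter] = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        model[prev][nxt] += 1
    return model


def generate_greedy(model: dict[str, Counter], start: str, length: int) -> str:
    """Snap in the single most likely next 'tile' at every step."""
    out = [start]
    for _ in range(length):
        followers = model.get(out[-1])
        if not followers:  # dead end: the model never saw this word continue
            break
        out.append(followers.most_common(1)[0][0])
    return " ".join(out)


corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."
model = train_bigram_model(corpus)
print(generate_greedy(model, "the", 6))  # the cat sat on the cat sat
```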

The thing is that these texts are too bland for their own good. GLTR's modus operandi goes like this: it takes an input text and analyzes how the GPT-2 model would have predicted its construction. For each word, it checks where that word ranks among GPT-2's predictions, given the text that precedes it. Words near the top of the ranking are generic and thus probably procedurally generated, while others are more "unpredictable".
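The core of that analysis, "where does the actual word rank among the model's guesses?", can be sketched in a few lines. This minimal version assumes a toy bigram counter in place of GPT-2, so each prediction only looks at the previous word; `token_ranks` and the corpus are hypothetical names for illustration, not GLTR's code.

```python
import math
from collections import Counter, defaultdict


def train_bigram_model(corpus: str) -> dict[str, Counter]:
    """Toy stand-in for GPT-2: counts which word follows which."""
    words = corpus.lower().split()
    model: dict[str, Counter] = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        model[prev][nxt] += 1
    return model


def token_ranks(model: dict[str, Counter], text: str) -> list[tuple[str, float]]:
    """Pair each word (after the first) with its rank in the model's predictions.

    Rank 1 means the word was the model's top guess given the previous word.
    Continuations the toy model never saw get math.inf (GPT-2 itself gives
    every token some probability, so its ranks are always finite).
    """
    words = text.lower().split()
    scored = []
    for prev, actual in zip(words, words[1:]):
        ranked = [w for w, _count in model.get(prev, Counter()).most_common()]
        rank = ranked.index(actual) + 1 if actual in ranked else math.inf
        scored.append((actual, rank))
    return scored


corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."
model = train_bigram_model(corpus)
print(token_ranks(model, "the cat ate the fish"))
# [('cat', 1), ('ate', 2), ('the', 1), ('fish', 3)]
```

Low ranks across the board are the "too bland" signature; a human text tends to mix in words the model ranked much lower.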

The results are presented as color-coded text. Green marks words in GPT-2's top 10 predictions, the ones most likely to have been generated by it. Yellow and red mark the top 100 and top 1000, respectively. The rest of the text, the less predictable or unique stuff, is marked purple.
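The color scheme boils down to a simple bucketing of ranks. Below is a minimal sketch that follows the thresholds described above, assuming 1-based ranks (rank 1 = the model's top guess) and rendering each word as an HTML `<span>`; the function names and CSS class names are hypothetical, not GLTR's actual markup.

```python
import html


def gltr_color(rank: float) -> str:
    """Bucket a 1-based prediction rank into the four colors described above."""
    if rank <= 10:
        return "green"   # among the model's top 10 guesses
    if rank <= 100:
        return "yellow"  # top 100
    if rank <= 1000:
        return "red"     # top 1000
    return "purple"      # everything less predictable


def render_html(scored_words: list[tuple[str, float]]) -> str:
    """Wrap each word in a <span> whose (hypothetical) CSS class names its color."""
    return " ".join(
        f'<span class="{gltr_color(rank)}">{html.escape(word)}</span>'
        for word, rank in scored_words
    )


print([gltr_color(r) for r in (1, 42, 512, 5000)])
# ['green', 'yellow', 'red', 'purple']
print(render_html([("unicorns", 3), ("<3", float("inf"))]))
# <span class="green">unicorns</span> <span class="purple">&lt;3</span>
```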

Here's what human-written text looks like:

Here's what procedurally generated text looks like:

As you can see, Ezra Pound's little poem is splattered with all the available colors, while the paragraphs about something-something are so green that you start hallucinating Miles Davis' "Green" composition slowed down to a gentle rumbling rustle.

***

However, that's not the only way of using this tool.

The way it visualizes its analysis can be reinterpreted as an instrument for turning textual pieces into visual poetry.

GLTR ranks how predictable each word is at its position in the text and highlights the words in four key colors.

If you use GLTR on traditionally written text, such as general-purpose evergreen pieces like articles on a company blog, top 10 tips for essay writing, or mainstream narrative-based opinion pieces, you get a more or less even spread of colors: lots of green, some yellow and red splashes, and burps of purple. Grammarly optimization usually blends the text into an even more generic smoothie in that regard.
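One way to put a rough number on that "spread of colors" is to count what share of a text's words lands in each bucket. This is a minimal sketch, assuming you already have a 1-based rank for every word from some GLTR-style ranking step; `color_spread` and the threshold list are illustrative names, not part of GLTR.

```python
from collections import Counter

# Rank thresholds for the four colors as described in the text (1-based ranks).
BUCKETS = [(10, "green"), (100, "yellow"), (1000, "red")]
COLORS = ("green", "yellow", "red", "purple")


def color_spread(ranks: list[float]) -> dict[str, float]:
    """Return the share of words that land in each color bucket."""
    counts = Counter(
        next((color for limit, color in BUCKETS if rank <= limit), "purple")
        for rank in ranks
    )
    total = len(ranks) or 1  # avoid dividing by zero on empty input
    return {color: counts[color] / total for color in COLORS}


spread = color_spread([1, 2, 3, 50, 500, float("inf")])
print(spread["green"])  # 0.5
```

A bland, generic text would push the green share toward 1.0, while poetry would spread the shares more widely.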

However, if you use GLTR on an unconventionally composed text, something like poetry, you might get a visual treat like nothing else.

Here's why: poetry is unconventional by design. It is supposed to differ from casual uses of language; it explores the aesthetic qualities of language, and as such it tends to go places. This includes slight and not-so-slight manipulations, substitutions, displacements, rearrangements, and reiterations of context.

Given that context is the primary navigation tool for predictive algorithms, poetry is a literal nightmare for them. The algorithm can't comprehend context that is "off" the model's standards, so it tries to assess the poem like any other text. The results are colorful.

Here's my take:

This text was composed of edited-out lines from other texts left in the clipboard history, combined with thesaurus descriptions of words, arranged and rewritten into a vague impressionist narrative. Its context is hard to dig into without connecting the nebulous dots (something the algorithm is incapable of doing). Because of that, the color layout is drastically different from that of casual text.

Here's another poem:

GLTR's color ranking adds an intangible layer to the text. Even if readers don't know what the colors specifically designate in GLTR, each person has their own associations with those colors, and these associations enrich the text. It might be some kind of emotion, or sound, or tone, or anything else. The color layout creates a sub-narrative of shifting notions that can't be expressed in words.

It is utterly pointless and runs backwards to the core purpose of the tool, but why not, if you can? After all, no one said you can't have fun with it.
